Autonomous Vehicle

About

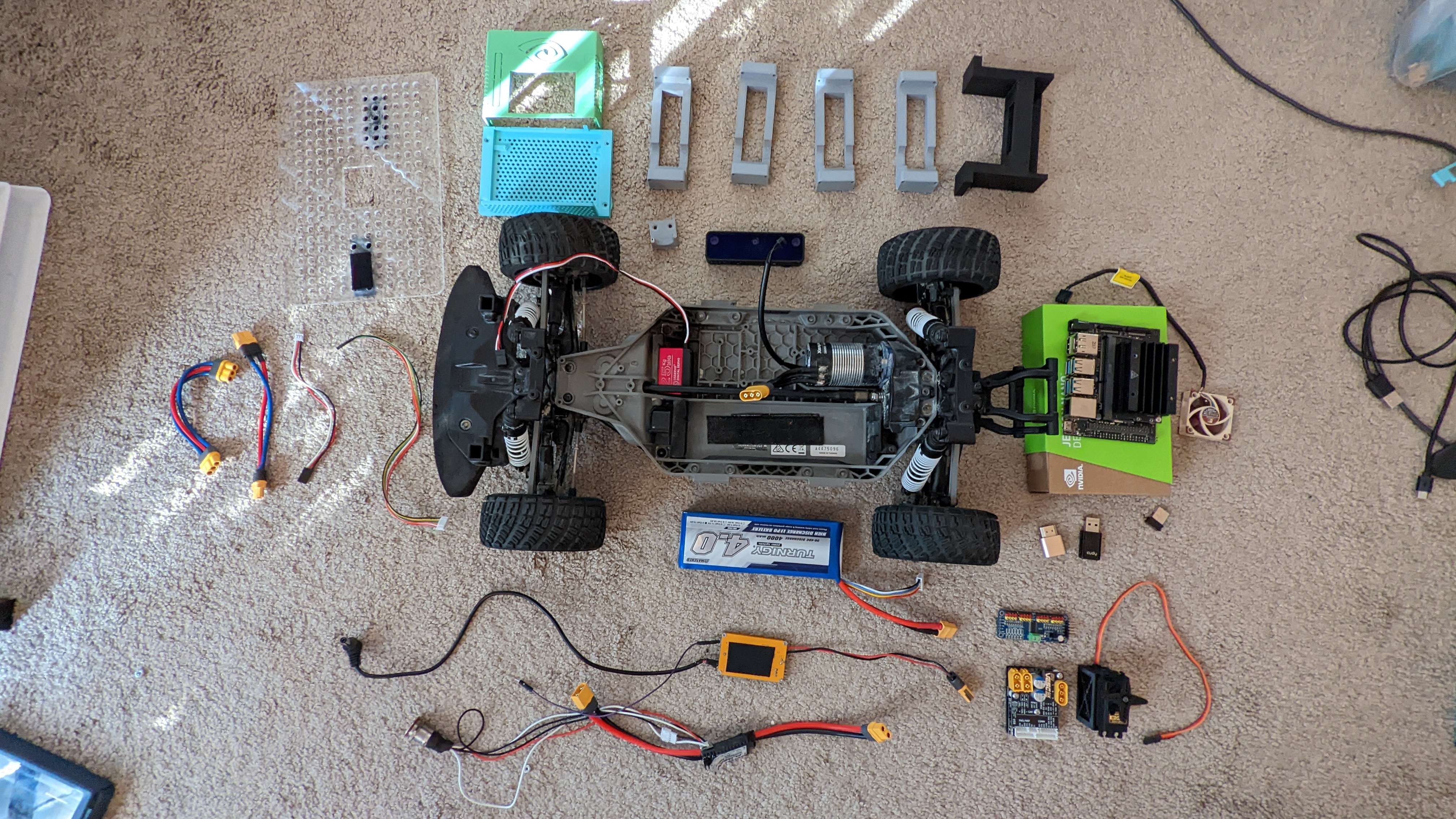

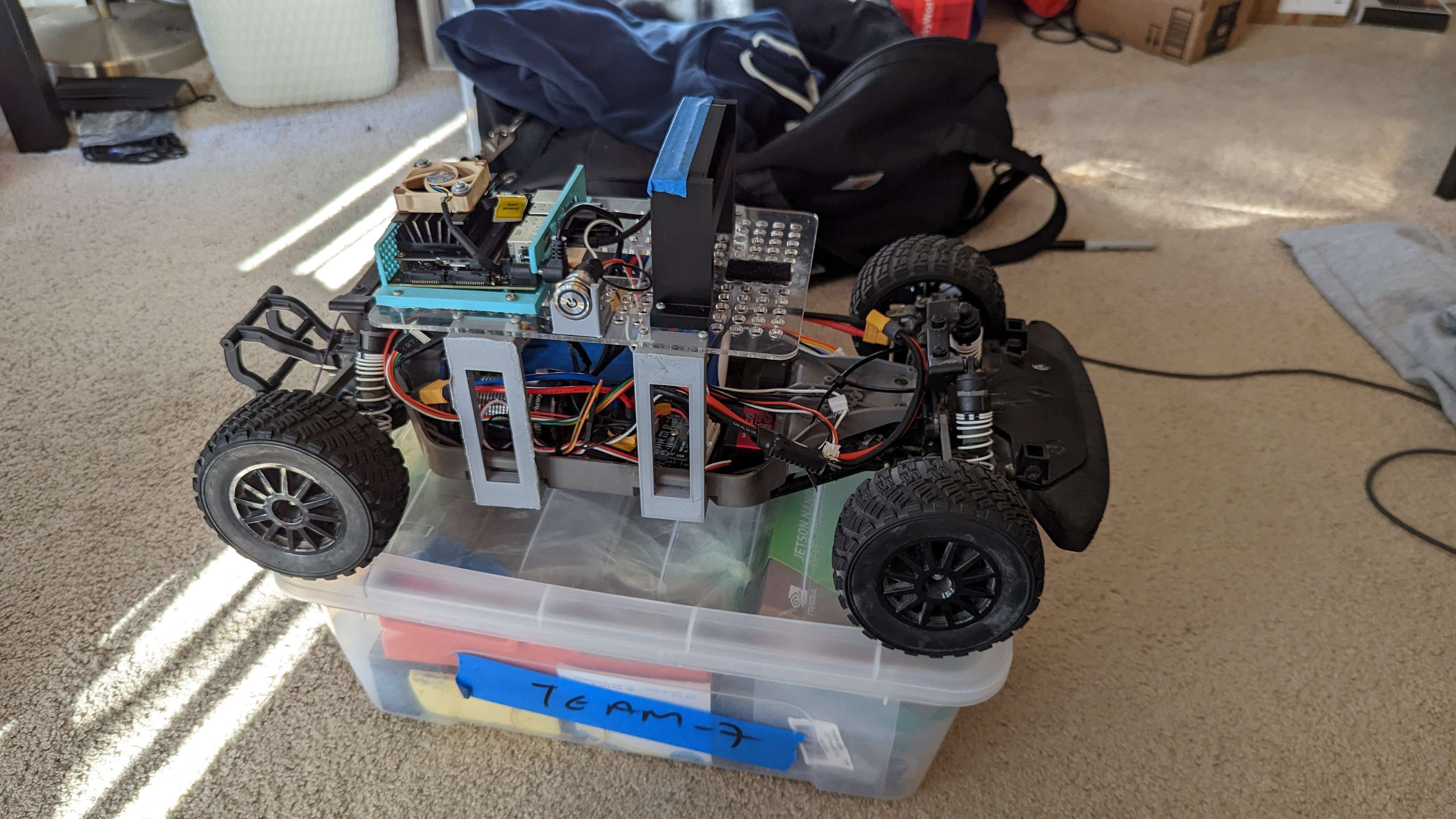

This was a three-person project to convert a basic RC car, equipped with just a motor, a servo, and a battery, into an autonomous vehicle. Some notable components that made this conversion possible are:

- Camera that can output video

- NVIDIA Jetson Nano

- VESC (Vedder Electronic Speed Controller)

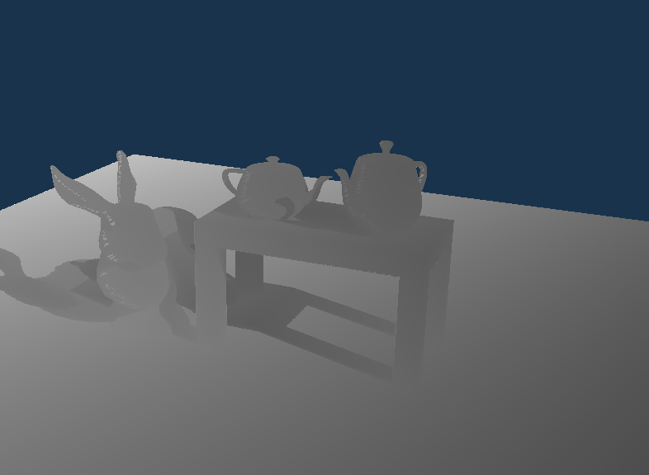

Three Autonomous Laps using Python and OpenCV

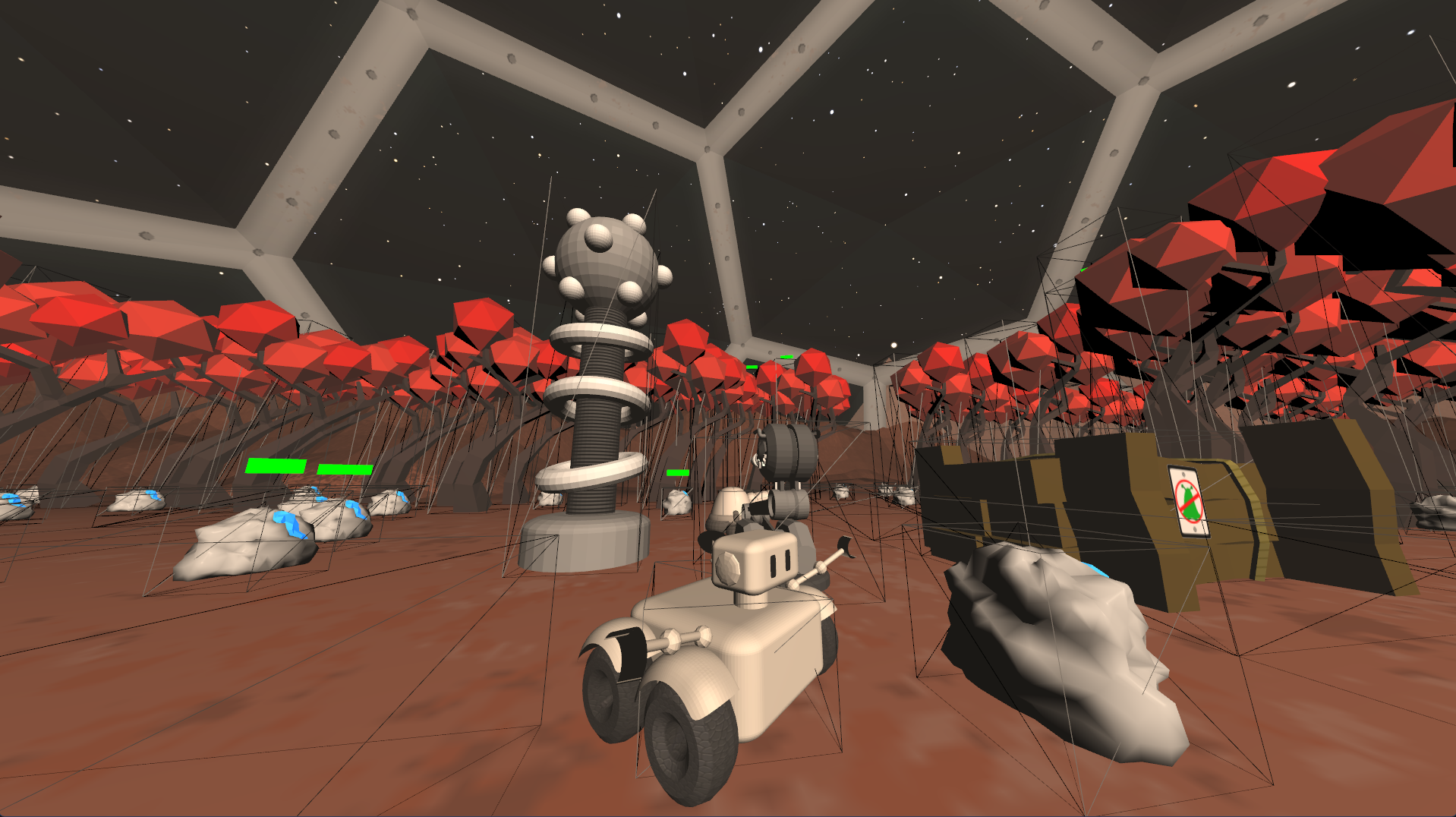

For this project, I used OpenCV to filter the video feed and detect the lane lines on both sides of the car. From the two detected lines, I calculated the bisector, and its angle was used to steer the car. The following video shows three autonomous laps using this method.

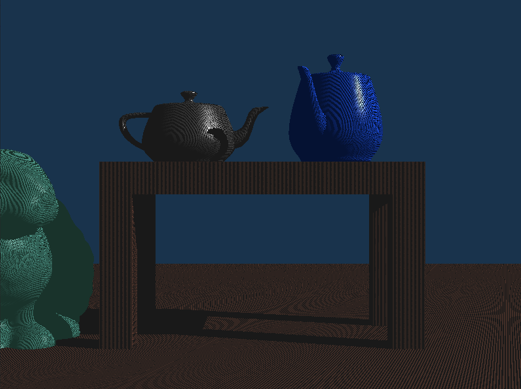
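
As a rough illustration of the bisector idea described above, here is a minimal sketch in plain Python. It assumes each lane line has already been reduced to one segment `(x1, y1, x2, y2)` in image pixels (for example by a Hough transform, though the write-up does not say how the lines were extracted). The function names and the sign convention (0 degrees is straight ahead, positive steers right) are illustrative assumptions, not the project's actual code.

```python
import math

def unit_direction(line):
    """Unit vector along a lane segment, oriented up the image (toward smaller y).

    `line` is (x1, y1, x2, y2) in pixel coordinates, with y growing downward.
    """
    x1, y1, x2, y2 = line
    dx, dy = x2 - x1, y2 - y1
    if dy > 0:
        # Flip so the vector points away from the car (up the image).
        dx, dy = -dx, -dy
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("degenerate line segment")
    return dx / length, dy / length

def bisector_steering_angle(left_line, right_line):
    """Angle of the bisector of the two lane lines, in degrees from vertical.

    Assumed convention: 0 is straight ahead, positive is to the right.
    """
    lx, ly = unit_direction(left_line)
    rx, ry = unit_direction(right_line)
    # The sum of two unit vectors points along the bisector of the angle between them.
    bx, by = lx + rx, ly + ry
    return math.degrees(math.atan2(bx, -by))

# Hypothetical segments: lanes converging symmetrically, then both leaning right.
straight = bisector_steering_angle((100, 400, 250, 200), (500, 400, 350, 200))
curve = bisector_steering_angle((100, 400, 300, 200), (400, 400, 600, 200))
```

In a real pipeline the resulting angle would still need to be scaled and clamped to the servo's range before being sent to the steering hardware; that mapping depends on the car and is not shown here.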

Wildlife Conservation Protocol

Another fun project we did was using object detection to adjust driving behavior. For the object detection, I used a model pre-trained on COCO (Common Objects in Context) to detect pedestrians and animals such as a raccoon. Combining this with a simple form of distance estimation, I was able to program different behaviors depending on the object immediately in front of the vehicle, as seen in the video below. Enjoy!
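
The write-up does not say how distance was estimated, so the following is only a sketch of one common simple approach: a pinhole-camera estimate from the height of the detection's bounding box, followed by a per-label reaction. The focal length, object heights, labels, reaction names, and the trigger distance are all assumed values for illustration, not figures from the project.

```python
from dataclasses import dataclass

# All constants below are assumptions for illustration, not project values.
FOCAL_LENGTH_PX = 700.0                                   # camera focal length in pixels
KNOWN_HEIGHT_M = {"person": 1.7, "dog": 0.5, "cat": 0.25}  # rough real-world heights
REACTIONS = {"person": "stop", "dog": "slow", "cat": "slow"}

@dataclass
class Detection:
    label: str            # class name reported by the detector
    box_height_px: float  # height of the bounding box in pixels

def estimate_distance_m(det):
    """Pinhole estimate: distance = real height * focal length / pixel height."""
    real_height = KNOWN_HEIGHT_M.get(det.label)
    if real_height is None or det.box_height_px <= 0:
        return None  # unknown class or unusable box
    return real_height * FOCAL_LENGTH_PX / det.box_height_px

def choose_behavior(det, react_within_m=3.0):
    """Pick a driving behavior for the object directly ahead (hypothetical policy)."""
    distance = estimate_distance_m(det)
    if distance is None or distance > react_within_m:
        return "cruise"
    return REACTIONS.get(det.label, "cruise")

# Example: a person whose box is 700 px tall is estimated at about 1.7 m away.
behavior = choose_behavior(Detection("person", 700.0))
```

The appeal of this kind of estimate is that it needs only the bounding box already produced by the detector, at the cost of accuracy when an object is partly occluded or differs from its assumed height.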